Amidst the war, I am holding onto hope for a future of peace and tolerance. At the end of the post I include a few pictures from the safe room (closet). Writing this also made me reflect on related posts from 2017 and 2024.

The Fully Depolarizing Noise Conjecture (2006)

Conjecture (Fully Depolarizing Noise for Bell States).

For every physical implementation of a quantum computer, whenever a Bell state on a pair of physical qubits is created, there exists a small probability $t>0$ that both qubits are replaced by the maximally mixed state.

Equivalently: the preparation of a Bell state (i.e., a two-qubit cat state) on two physical qubits necessarily carries a non-negligible probability that both qubits undergo fully depolarizing noise.

Twenty years ago I proposed that this phenomenon cannot be avoided by any method of preparing a Bell state on a pair of physical qubits. In particular, the conjecture applies to any pair of transmons in a Bell state in superconducting architectures. As far as I know, the conjecture remains open.

What is a Bell state?

A Bell state (also called a two-qubit cat state) is a maximally entangled state of two qubits. A canonical example is

The other Bell states are obtained from this one by local unitary transformations (for example, by applying Pauli operations to one of the qubits).

Bell states represent the simplest and most fundamental form of quantum entanglement. They are the basic resource behind quantum teleportation, entanglement swapping, and many constructions in quantum error correction.

Remark: In its original formulation, the conjecture was stated more broadly: it applied to every entangled pair of physical qubits, not necessarily maximally entangled ones. Moreover, the strength of the correlated depolarizing component was conjectured to depend linearly on the amount of entanglement between the qubits. The discussion below implicitly relies on this more general formulation.

Direct versus indirect creation of entanglement

For directly gated qubits, this behavior is already built into standard stochastic noise models. The novelty of the conjecture is the claim that this remains true, with a similar error level, even when the cat state is created indirectly. Quantitatively, I conjecture that the value of $t$ in the conjecture is in the ballpark of the rate of fully depolarizing 2-gate errors.

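For example, starting with qubit 1 in the state $|+\rangle$ and qubits 2 and 3 in $|0\rangle$, one may create a cat state on qubits 1 and 3 by:

- applying a CNOT on (1,2),

- then a CNOT on (2,3),

- and measuring qubit 2 in the $X$ basis (followed by a Pauli correction), or uncomputing it.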

In standard gate-level noise models, if each two-qubit gate independently depolarizes its participating pair with probability $t$, then:

- qubit 1 is replaced with probability $\approx t$,

- qubit 3 is replaced with probability $\approx t$,

- both are replaced only with probability $\approx t^2$.
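The gate-level bookkeeping above can be checked with a small Monte Carlo. This is a sketch under the stated textbook assumption (each CNOT independently fully depolarizes its pair with probability $t$), using the measurement variant of the construction; the function name `simulate_indirect_bell` is hypothetical, and the code tracks only which qubits get replaced, not the quantum state.

```python
import random


def simulate_indirect_bell(t, shots, seed=0):
    """Monte Carlo of which qubits get replaced in the indirect construction.

    Circuit: CNOT(1,2), then CNOT(2,3), then measure qubit 2.
    Noise (standard gate-level assumption): each CNOT independently fully
    depolarizes the pair it acts on with probability t. Only the "replaced"
    events are tracked, not the quantum state.
    """
    rng = random.Random(seed)
    hit1 = hit3 = both = 0
    for _ in range(shots):
        cnot12_fails = rng.random() < t   # replaces qubits 1 and 2
        cnot23_fails = rng.random() < t   # replaces qubits 2 and 3
        hit1 += cnot12_fails              # qubit 1 is touched only by CNOT(1,2)
        hit3 += cnot23_fails              # qubit 3 is touched only by CNOT(2,3)
        both += cnot12_fails and cnot23_fails
    return hit1 / shots, hit3 / shots, both / shots


p1, p3, p_both = simulate_indirect_bell(t=0.05, shots=200_000)
print(p1, p3, p_both)   # roughly 0.05, 0.05 and 0.0025 = t**2
```

By contrast, a single direct CNOT on (1,3) under the same model would replace both qubits together with probability $t$.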

Thus, in such models, the probability that both entangled qubits are hit drops from order $t$ (direct gate) to order $t^2$ (indirect construction).

My conjecture asserts that nature does not permit such quadratic suppression. Even when entanglement is generated indirectly (through mediating qubits, measurement, feedforward, or clever circuit identities), the resulting Bell state on the two physical qubits still carries an intrinsic order-$t$ probability that both qubits are replaced by the maximally mixed state.

Formulating the conjecture for state-of-the-art superconducting devices makes it more concrete. For superconducting quantum computers, the best 2-gate error rates are around 0.005, and we can assume that a large fraction of this noise is a depolarizing channel hitting both qubits. If the conjecture holds for indirectly entangled qubits, we should be able to identify fully depolarizing errors on pairs of entangled qubits at a similar rate, rather than at a rate two orders of magnitude smaller (as the gate-level computation above would suggest).
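The "two orders of magnitude" can be made explicit with back-of-the-envelope arithmetic. This sketch simplistically assumes that the whole 0.005 two-qubit error rate is fully depolarizing, and compares the conjectured order-$t$ joint rate with the $t^2$ estimate from the gate-level computation above.

```python
t = 0.005                          # best two-qubit gate error rate quoted in the text
conjectured_pair_rate = t          # conjecture: joint full depolarization at order t
gate_level_pair_rate = t ** 2      # gate-level model, indirect construction: order t^2
ratio = conjectured_pair_rate / gate_level_pair_rate

print(f"conjectured ~{conjectured_pair_rate:.1e}")   # conjectured ~5.0e-03
print(f"gate-level  ~{gate_level_pair_rate:.1e}")    # gate-level  ~2.5e-05
print(f"ratio       ~{ratio:.0f}")                   # ratio       ~200
```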

Physical intuition

The conjecture expresses, in a mathematically sharp way, a common physical intuition:

Entanglement between two physical systems requires a genuine physical interaction, and such interaction inevitably exposes both systems to correlated noise.

For example, there is no indirect method of performing an entangling gate between two transmons (say) that is much more reliable than just directly interacting them. Standard gate-level noise modeling does not enforce this. Indeed, in simple circuit models (as we computed above), indirect constructions reduce fully depolarizing errors from order $t$ to order $t^2$. Thus the conjecture postulates an additional, more subtle “nasty” property of physical noise, one that goes beyond standard local stochastic models. (I was myself recently confused about whether this stronger behavior follows from standard simulations; it does not.)

Localizable entanglement

Given a quantum state, the localizable entanglement (Verstraete–Popp–Cirac, 2004) of a pair of qubits is defined as the maximum amount of entanglement that can be obtained between them when single-qubit measurements are performed on all remaining qubits and the outcomes are conditioned upon.
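For three qubits this definition can be evaluated numerically. The sketch below makes several assumptions that are not part of the original text: entanglement is measured by the concurrence (averaged over measurement outcomes, as in the Verstraete–Popp–Cirac setting), only projective measurements on the middle qubit are considered, and the maximum is approximated by a grid search over the Bloch sphere. The function names are hypothetical.

```python
import cmath
import math


def project_middle_qubit(psi, m):
    """Unnormalized 2x2 amplitudes of qubits 1 and 3 after qubit 2 yields outcome m.

    psi holds 8 amplitudes, index = 4*q1 + 2*q2 + q3; m is a normalized
    single-qubit vector (the measurement outcome state).
    """
    return [[sum(m[b].conjugate() * psi[4 * a + 2 * b + c] for b in range(2))
             for c in range(2)]
            for a in range(2)]


def localizable_concurrence(psi, steps=40):
    """Grid-search estimate of the localizable entanglement of qubits 1 and 3.

    Assumptions: entanglement is measured by the concurrence, only projective
    measurements on qubit 2 are scanned, and the maximum is taken over a
    (theta, phi) grid on the Bloch sphere, so the result is a lower estimate.
    For an outcome with unnormalized amplitudes M (probability p), the
    concurrence of the normalized pure state is 2|det M| / p, so the
    outcome-averaged concurrence is simply the sum of 2|det M| over outcomes.
    """
    best = 0.0
    for i in range(steps + 1):
        theta = math.pi * i / steps
        c, s = math.cos(theta / 2), math.sin(theta / 2)
        for j in range(2 * steps):
            phase = cmath.exp(1j * math.pi * j / steps)
            basis = ([complex(c), phase * s], [-phase.conjugate() * s, complex(c)])
            avg = 0.0
            for m in basis:
                M = project_middle_qubit(psi, m)
                avg += 2 * abs(M[0][0] * M[1][1] - M[0][1] * M[1][0])
            best = max(best, avg)
    return best


ghz = [0j] * 8
ghz[0] = ghz[7] = complex(1 / math.sqrt(2))      # (|000> + |111>)/sqrt(2)
print(localizable_concurrence(ghz))              # approximately 1.0 (X-basis measurement)
```

For the GHZ state the average concurrence equals $\sin\theta$, so a $Z$-basis measurement leaves qubits 1 and 3 unentangled while an $X$-basis measurement leaves them in a Bell state; this is the same mechanism as in the indirect construction above.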

The conjecture extends to such localizable entangled pairs: whenever a pair of qubits exhibits non-zero localizable entanglement, there exists a small probability $t>0$ (depending linearly on the value of the localizable entanglement) that both qubits are replaced by the maximally mixed (maximum-entropy) state.

Implications for larger entangled states

For more complicated entangled states (surface-code states, GHZ states, random-circuit sampling states, and cluster states), the extended conjecture applies to every pair of physical qubits that can exhibit localizable entanglement. In several canonical families (such as GHZ states, cluster states, and surface-code states), this includes essentially every pair of qubits.

If true, this would have severe consequences for quantum error correction: correlated depolarizing noise on pairs of qubits is far more damaging than the quasi-independent noise assumed in threshold theorems. The reason is that a noise channel in which the events “qubit $i$ is depolarized” and “qubit $j$ is depolarized” are positively correlated for all pairs $i,j$ necessarily leads to large-scale error synchronization.
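For contrast, here is the quasi-independent case. Assuming (for illustration only) that each of $n$ qubits is fully depolarized independently with probability $p$, the number of hit qubits $S$ is binomial, and the chance that a large fraction of the qubits is hit at once is astronomically small. In the simple probabilistic model discussed later in this post, positive pairwise correlations instead force such synchronized events to have probability of order $t$. The function name is hypothetical.

```python
from math import comb


def independent_tail(n, p, k):
    """Pr(S >= k) when each of n qubits is fully depolarized independently w.p. p."""
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k, n + 1))


n, p = 101, 0.005
print(independent_tail(n, p, 1))                # about 0.40: some qubit is hit
print(independent_tail(n, p, (n + 1) // 2))     # below 1e-80: half the qubits at once
```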

The state of the conjecture

As far as I know, the conjecture remains open.

Current NISQ-scale devices could in principle test it experimentally—even at noise levels above the fault-tolerance threshold. The challenge is not the scale, but the identification of the specific correlated fully-depolarizing component in the noise.

Recent demonstrations of distance-3, distance-5, and even distance-7 surface codes appear, at least superficially, to be in tension with the conjecture. Whether this tension is genuine or only apparent deserves careful examination. It will also be interesting to examine the situation for experimentally realized “large” GHZ states.

I look forward to the conjecture being carefully tested—and, of course, possibly refuted.

Motivation

The original motivation for the conjecture was to “reverse engineer” natural structural conditions on noise that would cause quantum fault tolerance to fail.

For this reason, I did not view the conjecture itself as a reason for people to revise their a priori beliefs about quantum computers. Rather, I regarded it as a concrete and testable benchmark for quantum devices — one that is meaningful both for small systems with only a handful of qubits and for larger systems. The conjecture is relevant even to systems operating at noise levels above the threshold required for fault tolerance.

Sources

In March 2006 I raised an early version of the conjecture on Dave Bacon’s blog and discussed it with Dave Bacon, John Sidles, and others. The conjecture later appeared as Postulate 1 in my 2006 paper How quantum computers can fail and in several subsequent works. The related “noise synchronization” consequence for highly entangled states appeared as Postulate 2 and it connects back to my 2005 paper.

These postulates became Conjecture 3 and Conjecture 4 in my 2012 debate with Aram Harrow, where they played a central role. Conjectures 3 and 4 from the debate and a further consequence for the error rate (in terms of qubit errors) are Predictions 1, 2, and 3 in my 2016 paper “The quantum computer Puzzle”.

Remark: In my papers I struggled to formulate the conjecture precisely and to identify the most appropriate notion of entanglement. Formulating the conjecture for localized entanglement (which I referred to as emergent entanglement) was proposed in my 2009 paper “Quantum computers: Noise propagation and adversarial noise models.”

I also considered a weaker notion where the maximum amount of entanglement over single-qubit measurements is replaced by the average entanglement obtained from such measurements.

In some early papers I also formulated related conjectures for correlated classical noisy systems, asserting that correlation for the “signal” implies correlation for the noise. In this generality classical computation provides simple counterexamples. Nevertheless, suitably adjusted versions of the conjecture appear to apply to several natural physical systems.

Some counter arguments (and responses)

1) “This may be true for physical qubits, but it is false for logical qubits.”

Yes — the conjecture refers to physical qubits.

If the conjecture holds, it lowers the prospects for achieving reliable logical qubits. In fact, good-quality logical qubits would violate the Fully Depolarizing Noise Conjecture at the physical level. Thus the conjecture asserts that sufficiently good logical qubits are out of reach because the necessary physical behavior is unavailable.

2) “The conjecture does not describe a legitimate quantum channel (namely a channel supported by quantum mechanics).”

The conjecture does not specify a complete noise model. It imposes constraints on whatever the correct physical noise model is.

Even if universally true, the physical mechanisms leading to the conjectured behavior may differ between implementations. The conjecture is structural, not mechanistic.

3) “The conjecture violates linearity of QM. It is possible that it will apply to one initial state of your quantum computer but not to another one.”

This objection is interesting, but incorrect. As I wrote above, the conjecture proposes constraints on the noise model rather than a complete description. If, for the same circuit, the conjecture applies for one initial condition but not for another, this does not violate linearity. Instead, it implies that if preparing these initial conditions makes no difference for the noise, then correlated depolarizing errors will appear in both cases.

4) The noise (or Nature) cannot “know” if the state is entangled or not. Entanglement cannot cause correlations for the noise.

The conjecture does not assume that noise “detects” entanglement or that entanglement directly “causes” correlation. It asserts that the physical processes required to generate entanglement inevitably produce correlated noise.

5) “The conjectured noise resembles nonphysical random-unitary models.”

Even if certain effective noise models implied by the conjecture appear unphysical, that does not refute the conjecture. It may instead indicate that certain large-scale entangled states are themselves physically unrealizable.

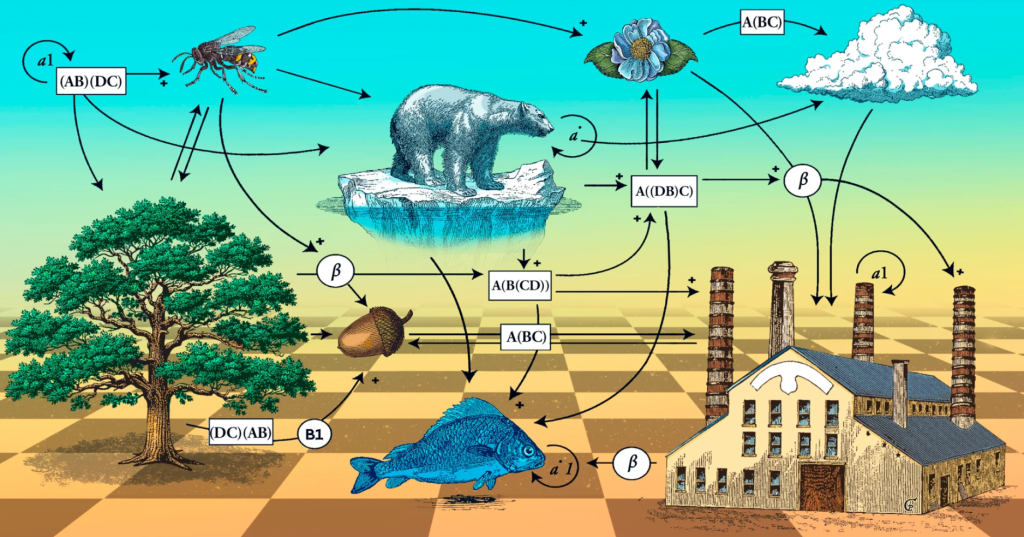

If creating a distance-15 surface code necessarily produces nonphysical types of noise, that would suggest that building such a code is itself nonphysical. The figure in my 2009 paper When Noise Accumulates was meant to illustrate precisely this point.

6) “The threshold theorem extends to general long-range noise.”

Yes — but these extensions still violate the conjecture. Mathematically speaking, many small long-range interaction terms are not the same as imposing substantial correlated depolarization.

7) “Entanglement is basis dependent.”

The conjecture refers to correlations in a fixed computational basis. There is no ambiguity here.

8) “Empirical independence in random circuit sampling shows that there are no unexpected nasty aspects of quantum noise.”

No.

Fidelity measures (including XEB) primarily estimate the probability that no error occurred. They are not sensitive to correlated structure among the error events that do occur.

The conjecture concerns correlations between qubits conditioned on noise events, not the global fidelity of outputs.

9) “If the error rate is $t$, the conjecture implies $tn^2$ errors per round. (We expect $tn$ errors per round.)”

This observation captures an important feature of the conjecture.

In trace-distance terms, the total noise per round may scale linearly.

But in terms of qubit-error counts, error synchronization can produce quadratic scaling $tn^2$ in the number of correlated qubit hits.

This quadratic synchronization is precisely the phenomenon the conjecture predicts.

10) The conjecture is too vague; it does not explicitly describe the noise channel. It also does not describe the physical source of the noise and its exact modeling.

This is partially true. The conjecture is structural and not mechanistic (see further explanation below).

Testing the conjecture experimentally would require identifying in experimental data specific correlated fully-depolarizing components. Supporting it theoretically would require fine-grained modeling of concrete physical systems.

11) As long as t > 0 is unspecified, the conjecture might remain open forever.

I conjecture that t is in the ballpark of the rate of 2-gate fully depolarizing error.

In contrast (to the best of my memory), for fault-tolerant quantum computers with $n$ physical qubits, for most pairs of qubits $i,j$, the correlation between the events “qubit $i$ is hit by a depolarizing error” and “qubit $j$ is hit by a depolarizing error” tends to zero as $n$ tends to infinity.

12) The correlation conjecture (and my earlier line of research from 2005) has no direct bearing on topological quantum computing.

Right.

13) The conjecture represents the “physical-noise-is-conspiring position”. However, Nature is not malicious.

We will see about that.

Remarks:

(to items 3 and 4) The possibility of a systematic relation between the noiseless state and the noise was raised and discussed in my 2005 paper and, over the years, has led to interesting and heated discussions.

(to item 5) Noise models that resemble the behavior of random unitary operators were proposed in early skeptical views. They do not violate the postulates of quantum mechanics, but they are considered unphysical: they violate the way quantum physics is believed to be mapped into quantum mechanics.

(to item 6) Preskill’s 2012 paper (partially triggered by our 2011 Caltech discussion) presented general conditions for the threshold theorem to hold and cites earlier papers in this direction. Robert Alicki opined that the conditions proposed by Preskill are violated for open quantum systems, and I explained in this comment a specific feature of Preskill’s very general noise models that I find physically questionable.

Gated qubits and “purifying” gate errors

The assertion of the conjecture for gated qubits is (a rather vanilla) part of the standard model of noise for noisy quantum circuits, and it is also clearly manifested by experimental data. The negation of the conjecture would mean the ability to create entangled pairs of transmons (or other physical qubits) without any fully depolarizing errors. In effect, this would amount to “purifying” the two-qubit gate used to generate entanglement: starting with two-qubit gates that include fully depolarizing noise at rate $t$, one could nevertheless produce physical entangled pairs with no fully depolarizing component on the pair itself. The conjecture asserts that such purification is not physically possible.

The conjecture is structural and not mechanistic

Some of the objections listed above (especially 2, 3, 4, and 10) treat the conjecture as if it were proposing a specific mechanistic claim of the form: “Here is the physical source of the noise (e.g., microscopic Hamiltonian couplings, thermal photons, leakage, cross-talk), and here is the dynamical derivation showing why fully depolarizing correlations appear.” Such a claim would specify the environment, the interaction model, the time evolution, and the exact channel arising from tracing out the bath. My conjecture is instead a structural claim of the form:

Whenever two physical qubits can be prepared (or projected) into a Bell state, there is an intrinsic order-$t$ fully depolarizing component acting jointly on them.

This is a statement about the form of the effective channel, not about the physical process generating it. Of course, mechanistic explanations (that may differ for different implementations) that support the conjecture could be valuable.

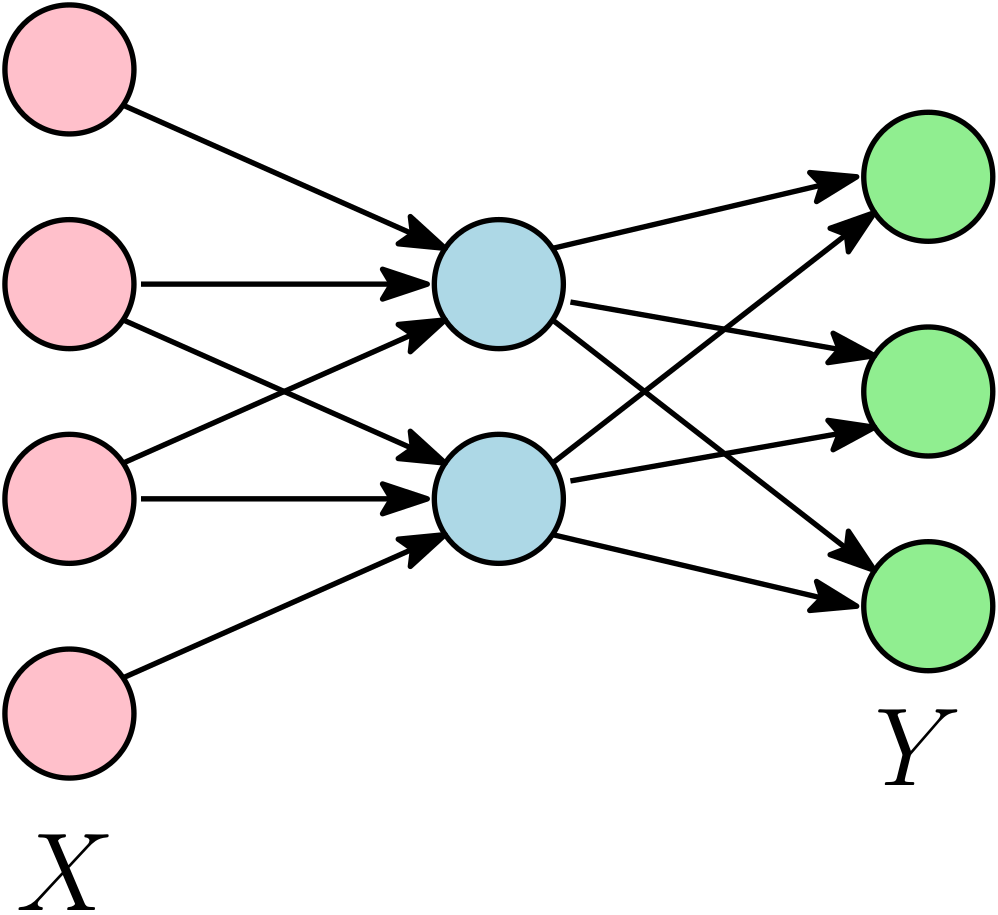

A Simple Probabilistic Model: Tail Bounds from Pairwise Marginals

To illustrate how strong pairwise correlations can force even large-scale error synchronization, consider the following simple probabilistic model. There is a probability distribution $D$ on $\{0,1\}^n$. We generate a random bitstring $x=(x_1,\dots,x_n)$ according to the distribution $D$. If $x_i=1$, we fully depolarize qubit $i$.

Let $x=(x_1,\dots,x_n)$ be a random 0–1 vector of length $n$, and write $S=x_1+x_2+\cdots+x_n$. We assume that the joint distribution of every pair $(x_i,x_j)$ (for $i<j$) is fixed. The goal is to minimize the upper tail probability $\Pr(S\ge K)$.

Theorem (symmetric “one-parameter” pair law): Assume that for every $i<j$,

$$\Pr[(x_i,x_j)=(1,1)]=\Pr[(x_i,x_j)=(1,0)]=\Pr[(x_i,x_j)=(0,1)]=\frac{t}{3},\qquad \Pr[(x_i,x_j)=(0,0)]=1-t,$$

where $t\in[0,1]$. Let $n\ge 2$ and $1\le K\le n$. Then the minimum possible value of $\Pr(S\ge K)$ over all such distributions equals

$$\min \Pr(S\ge K)= \begin{cases} \frac{4t}{3}\cdot\frac{n}{n+1}, & \text{if } K \le \frac{n+1}{2},\\[6pt] 0, & \text{if } K > \frac{n+1}{2}. \end{cases}$$

(In particular, for large $n$ this is asymptotically $\frac{4t}{3}$ for $K\le\frac{n+1}{2}$ and $0$ for $K>\frac{n+1}{2}$.)

A proof and discussion of this implication will be given separately.
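The value in the theorem can be explored with exact rational arithmetic. The sketch below (hypothetical function names, odd $n$ only) builds one distribution that attains it: with probability $q=\frac{4t}{3}\cdot\frac{n}{n+1}$ a uniformly random set of $m=\frac{n+1}{2}$ qubits is hit, and otherwise none. It checks that every pair $(x_i,x_j)$ then has the stated law and that $\Pr(S\ge K)=q$ for $K\le m$ and $0$ above. It also evaluates the Cauchy–Schwarz bound $\Pr(S\ge 1)\ge \mathbb{E}[S]^2/\mathbb{E}[S^2]$, whose two moments are fixed by the pair law; this confirms optimality for $K=1$ only and is not the proof promised above.

```python
from fractions import Fraction
from itertools import combinations
from math import comb


def extremal_distribution(n, t):
    """Attaining distribution for odd n: S = 0 w.p. 1 - q, otherwise a uniformly
    random subset of size m = (n + 1) / 2 is hit, where q = (4t/3) * n / (n + 1).
    Returns {frozenset of hit qubits: probability}.
    """
    assert n % 2 == 1, "this sketch only handles odd n"
    m = (n + 1) // 2
    q = Fraction(4, 3) * t * Fraction(n, n + 1)
    dist = {frozenset(): 1 - q}
    for subset in combinations(range(n), m):
        dist[frozenset(subset)] = q / comb(n, m)
    return dist


def pair_law(dist, i, j):
    """Joint law of (x_i, x_j) under dist, as {(a, b): probability}."""
    law = {(a, b): Fraction(0) for a in (0, 1) for b in (0, 1)}
    for hit, prob in dist.items():
        law[(int(i in hit), int(j in hit))] += prob
    return law


def tail(dist, k):
    """Pr(S >= k)."""
    return sum(prob for hit, prob in dist.items() if len(hit) >= k)


def cauchy_schwarz_bound(n, t):
    """Lower bound Pr(S >= 1) >= E[S]^2 / E[S^2], with moments fixed by the pair law."""
    mean = Fraction(2, 3) * t * n               # E[S] = n * Pr(x_i = 1) = n * 2t/3
    second = mean + n * (n - 1) * t / 3         # E[S^2] = E[S] + n(n-1) * Pr(x_i = x_j = 1)
    return mean * mean / second


t = Fraction(3, 100)
dist = extremal_distribution(5, t)
print(pair_law(dist, 0, 1))                      # (1,1), (1,0), (0,1) each have t/3 = 1/100
print(tail(dist, 3), cauchy_schwarz_bound(5, t))  # both equal (4t/3)(5/6) = 1/30
```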

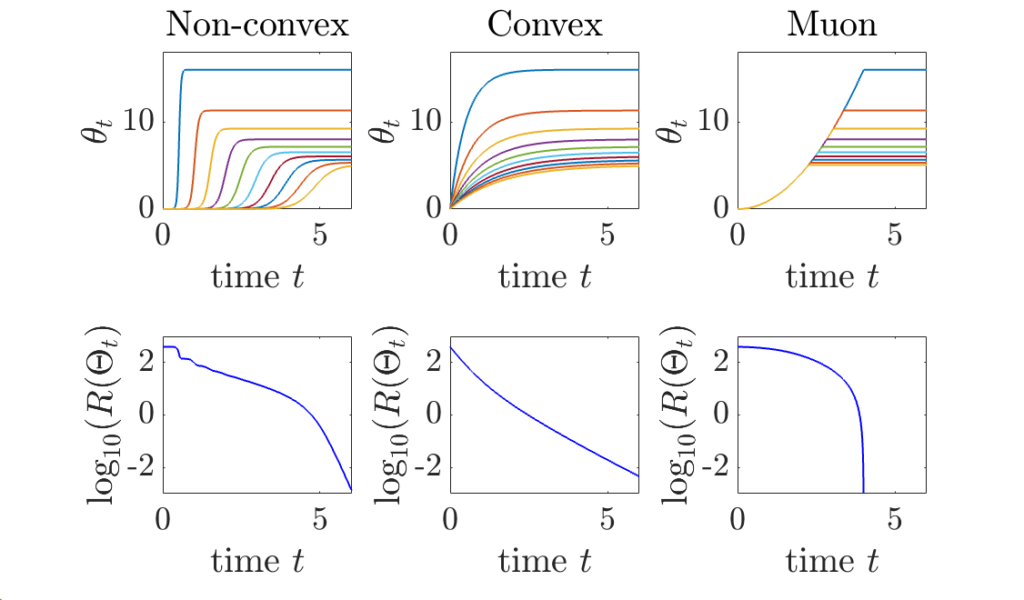

Time-parametrization and smooth Lindblad evolutions

My conjecture asserts that for noisy quantum states and evolutions — when the noise level is low — there are systematic relations between the noise and the corresponding “ideal” state. (Such systematic relations were already explored in my 2005 paper.) For example, the noise in a quantum computation may reflect symmetries and statistical features of the underlying signal.

Over the years, I made several attempts to place these ideas into a broader mathematical framework, extending beyond the specific (and hypothetical) setting of quantum computers. One direction I proposed was the study of certain subclasses of Lindblad evolutions, which I referred to as smoothed Lindblad evolutions. Another direction involved introducing an intrinsic time-parametrization for noisy quantum evolutions. These ideas appear as Predictions 6 and 7 in my 2016 Notices of the AMS paper.

Both directions aim at understanding noise not as arbitrary perturbation, but as a structured phenomenon constrained by the evolving quantum state.

My paper and discussion with Greg Kuperberg.

One offshoot of these conjectures — particularly the notion of smoothed Lindblad evolutions — and long email discussions with Greg Kuperberg led to a joint paper: Contagious error sources would need time travel to prevent quantum computation. Following ideas of Emanuel Knill, we proved that fault tolerance is possible even under very general forms of “disease-like” spreading noise.

Greg viewed this paper as a successful response to my skepticism. I did not quite see it that way. I saw our paper as clarifying an important point: if time-smoothing in my proposed class of Lindblad evolutions applies only forward in time, then fault tolerance can still succeed. To obstruct fault tolerance, the smoothing would need to extend to both past and future — effectively requiring a kind of “time-travel” correlation structure.

Recently, Greg jokingly proposed a joint mathematical collaboration (not necessarily on quantum topics) involving the two of us together with Gal Kronenberg and Guy Kindler. I think this is a wonderful idea.

My argument against QC (2013-2020), and other related directions.

Between 2013 and 2020, I pursued a different skeptical direction regarding quantum computation. Together with Guy Kindler (initially in the context of Boson Sampling), I developed an argument based on computational complexity and the notion of noise sensitivity. This line of work suggests that NISQ devices cannot achieve “quantum supremacy.”

Unlike the Fully Depolarizing Noise Conjecture — which posits a structurally “nasty” type of correlated noise beyond standard modeling — this later argument relies entirely on standard noise assumptions. It explains why the extremely low error rates required for quantum supremacy and scalable fault tolerance may be beyond reach.

Naturally, the quantum-supremacy claims of the past six years directly challenge this position. The central issue is careful evaluation of experimental details. As far as I know, those claims have no direct bearing on the Fully Depolarizing Noise Conjecture.

Both skeptical directions generate near-term experimental predictions. Both are discussed in my 2016 paper “The quantum computer Puzzle”. While experimental validation is the primary testing ground, another possible avenue is to derive broader physical consequences beyond the specific framework of quantum computing.

Let me also note that the question of whether “NISQ computers” could deliver “quantum supremacy” arose in my debate with Aram Harrow — and in other discussions — before the terms “NISQ” and “quantum supremacy” were coined.

Statistical testing

Since 2019, I have also been working (with Yosi Rinott, Tomer Shoham, and Carsten Voelkmann) on developing statistical tools for analyzing samples produced by NISQ experiments. Engaging directly with experimental data and statistical methodology may prove useful for testing the Fully Depolarizing Noise Conjecture as well.

One possible approach is to begin with a standard noise model, augment it with the hypothetical two-qubit depolarizing component (DEPOLARIZE2) predicted by the conjecture at some rate $t$, and then determine the value of $t$ that best matches empirical data. Here DEPOLARIZE2 refers to a two-qubit depolarizing channel acting jointly on the pair.
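A toy version of this fitting procedure is sketched below. It rests on strong simplifying assumptions that are not from the original text: the "both qubits of the Bell pair were fully depolarized" event is treated as directly observable (in a real experiment it must be inferred from measurement statistics, e.g. via a Stim simulation), the standard model is the indirect CNOT(1,2), CNOT(2,3) construction with independent gate failures at rate `p_gate`, and the hypothetical extra joint component acts on the pair (1,3) at rate `t_extra`. The estimator inverts $\Pr(\text{both hit}) = 1-(1-p^2)(1-t)$ at the observed frequency, which is the maximum-likelihood estimate for a binomial count (clipped at 0). For reference, Stim's `DEPOLARIZE2(p)` applies each of the 15 non-identity two-qubit Pauli products with probability $p/15$, so replacing a pair by the maximally mixed state with probability $t$ corresponds to $p = 15t/16$.

```python
import random


def simulate_both_hit(p_gate, t_extra, shots, seed=1):
    """Count shots in which both Bell-pair qubits (1 and 3) are fully depolarized.

    Toy model (assumption): CNOT(1,2) and CNOT(2,3) each fully depolarize their
    pair with probability p_gate, and a hypothetical extra joint event hits the
    pair (1,3) with probability t_extra, as the conjecture predicts.
    """
    rng = random.Random(seed)
    count = 0
    for _ in range(shots):
        gates_hit_both = rng.random() < p_gate and rng.random() < p_gate
        extra = rng.random() < t_extra
        count += gates_hit_both or extra
    return count


def fit_t_extra(count, shots, p_gate):
    """Maximum-likelihood estimate of t_extra from a binomial count.

    Pr(both hit) = 1 - (1 - p_gate**2) * (1 - t_extra); invert and clip at 0.
    """
    observed = count / shots
    return max(0.0, 1 - (1 - observed) / (1 - p_gate ** 2))


shots, p_gate = 200_000, 0.005
count = simulate_both_hit(p_gate, t_extra=0.005, shots=shots)
print(fit_t_extra(count, shots, p_gate))   # close to the planted value 0.005
```

With `t_extra=0` the same procedure returns a value near zero, since the gate-level model alone only produces joint hits at rate about `p_gate**2`.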

I recently had useful discussions with Craig Gidney about this type of simulation. Craig is optimistic that the skeptical position will be decisively refuted in the coming years. Statistical fitting of experimental samples to classes of $t$-noise models (discussed earlier) may also provide empirical tests of the conjecture.

Conclusion

The Fully Depolarizing Noise Conjecture was proposed twenty years ago as a structural stress test for quantum computing — a condition that scalable quantum devices must overcome. It does not attempt to describe a specific microscopic mechanism. Rather, it imposes a constraint on the effective noise channel: whenever physical qubits can generate entanglement — or localizable entanglement — correlated fully depolarizing noise must appear at linear order.

Whether this structural constraint reflects a fundamental limitation of quantum devices, or whether it will ultimately be refuted by experiment, remains an open question. The answer lies mainly in precise experimental scrutiny together with careful theoretical modeling and analysis.

A few pictures from the safe room (closet).

Late remark: There is a lovely interview of Scott Aaronson with Yuval Boger of QuEra. Yuval asked: “I’ve heard you refer many times in your talks to an argument with Gil Kalai about whether large-scale quantum computing is even possible. Do you think he still has a path to being vindicated, or is it over?” Scott gave a detailed and nice answer presenting his view of my position. (See also this SO post.) The discussion nicely illustrates how this debate continues to evolve twenty years after the Fully Depolarizing Noise Conjecture was first proposed.

By Gil Kalai
